Barrel bombs filled with poisonous chlorine gas landed on the village of Kafr Zita at 10.30 pm, when most inhabitants had gone to bed.

The attack on this village in northern Syria was the fifth in a single week, and just one of thousands of attacks on civilians during the eight-year Syrian civil war.

At least 35 residents were wounded or killed by the poisonous gas, according to an investigation conducted by the Organization for the Prohibition of Chemical Weapons (OPCW).

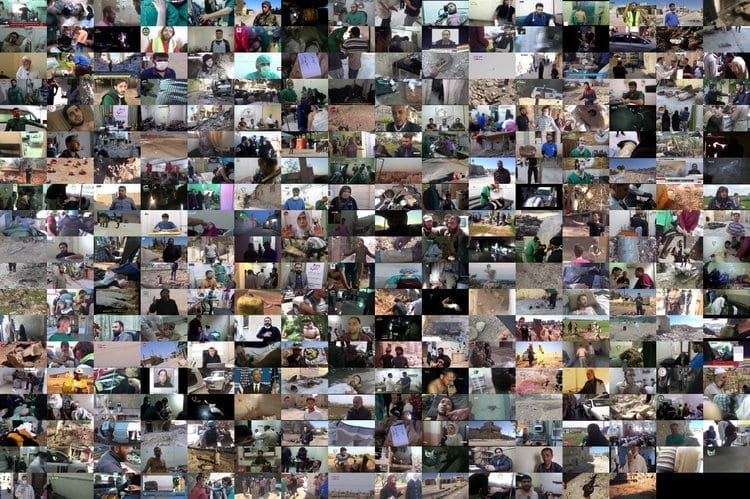

Several residents used their mobile phones to film victims being brought to the two small hospitals in Kafr Zita. The footage shows doctors and volunteers desperately trying to save lives by washing the victims' faces with water and giving them oxygen.

The videos were uploaded to YouTube and other social media in an attempt to draw international attention and to prove that war crimes were being committed, in this case by President Assad's regime.

But today several of the most important videos have disappeared, deleted by robots that automatically remove posts with inappropriate content.

Dying children on a hospital floor in Kafr Zita apparently belong to this category, notes Hadi al-Khatib, head of the NGO 'Syrian Archive', which collects and preserves evidence of war crimes and abuses in war-torn countries such as Syria, Yemen, Libya and Sudan.

“It’s a huge problem,” he says.

“Every minute, Facebook, YouTube and Twitter are trawling their platforms, removing material that one day might be crucial to convicting war criminals, and there’s nothing we can do about it. We can only try to save as much evidence as possible before it disappears,” Hadi al-Khatib says.

The material collected by the Syrian Archive is often used by the media, by human rights organizations and in court cases dealing with war crimes.

Lost evidence

The videos are all from the village of Kafr Zita in northern Syria, which was attacked with barrel bombs containing chlorine gas on 18 April 2014. The chemical attack was later confirmed by a fact-finding mission from the OPCW. The attack was the fifth in a series of chlorine gas attacks on the village.

According to Hadi al-Khatib, a very large part of the material uploaded from the Syrian civil war has already disappeared.

And more disappears every day as the automated systems used to detect violent material, child pornography and other prohibited content become increasingly efficient.

“We are in ongoing contact with Facebook, Twitter and Google, which owns YouTube, about the problem, but despite years of negotiations it seems they still don’t understand it,” Hadi al-Khatib says.

In some cases, the Syrian Archive or similar NGOs have succeeded in preserving important evidence, for instance from the chlorine gas attack in Kafr Zita. At least three crucial videos from the attack on 18 April 2014 have been removed from YouTube.

One video from Kafr Zita shows several young men wearing oxygen masks. They are located in what looks like a hospital room and seem to have trouble breathing.

The second video shows a larger group of men, women and children in a hospital. Several of them are coughing and having difficulty breathing. Later, more people wearing gas masks enter.

The third video shows an unexploded chlorine gas bomb being disarmed.

Fortunately, the Syrian Archive managed to save all three videos in its database, where they are included as evidence of the attack. But this is not always possible.

At Facebook Scandinavia, head of communications Peter Münster acknowledges that it is a problem if historical evidence disappears.

“We do not take down content just because it is offensive or unpleasant to look at. We sometimes put up a warning, but Facebook is also a platform you can use to create awareness about abuse, including violence against civilians,” he says.

“We usually only take down violent content if it is shared in order to, for example, glorify the violence. And if we take something down by mistake, you can appeal the decision and we will restore it.”

According to Peter Münster, Facebook uses artificial intelligence to trawl the huge amounts of material uploaded by its more than two billion daily users.

Humans then review the flagged posts and decide whether the content violates Facebook's guidelines.

In the past, Facebook relied on reports from its users, but that is no longer sufficient.

“The problem is that in closed groups and forums, the users tend to agree and often they do not report each other for violating guidelines,” Münster explains.

“We have had several unpleasant eye-openers lately. We have seen how our platform has been used to distribute misinformation during the 2016 US election and to further the persecution of the Rohingya in Myanmar.”

“These experiences have made it crystal clear to us that we have to take a much wider responsibility for the use of our platform.”

According to Peter Münster, Facebook currently has more than 15,000 employees evaluating potentially harmful material, either reported by users or found by the automated detection systems.

At Twitter, spokeswoman Ann Rose Harte declined to comment on the allegation that the service deletes posts that could, for example, be used as evidence in war crimes cases.

In general, however, Twitter exists to serve the public conversation, including the conversation about important political events, she says.

But that conversation has to take place within the limits of Twitter's guidelines.

“Our guidelines prohibit terrorism, hateful behavior, manipulation of our platform and abuse”, Ann Rose Harte says in an email to Danwatch.

“We enforce our guidelines in the same well-considered and impartial way for all our users. And we work with the authorities and assist with investigations when required by law,” Ann Rose Harte states.

Jesper Vangkilde, communications manager at Google, which owns YouTube, did not want to be quoted in this article.

However, on its official blog, Google has commented on the decision to use robots to remove problematic material from YouTube.

“While these tools are not perfect and not applicable in all situations, our systems have proven to be more accurate than humans when it comes to stopping videos that should be removed,” Google wrote a couple of years ago.

In response to questions from the Danish daily Information, Google has urged users to make sure the proper context is clear when uploading videos that risk being taken down because of violent content.

In that way evaluators have a better chance of assessing whether a video should be preserved or removed, a Google spokesman wrote in a response to the newspaper.

At Syrian Archive, however, Hadi al-Khatib is not reassured.

He believes that Facebook, Twitter and YouTube should use more resources to make sure that humans are reviewing the material that the robots propose to remove.

“It should be people who speak the language and understand the context who evaluate the individual notices. It is the only way you can ensure a proper decision on whether a posting should be removed or not,” he says.